Predicting Acoustics: The auralization of dynamic, real-time virtual environments using supervised learning.

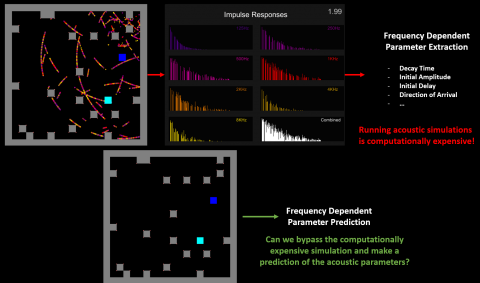

The computational complexity of simulating acoustics makes auralization difficult to achieve in highly dynamic, real-time video game virtual environments. Current methods are either too computationally intensive to run in real time or require offline baking of acoustic characteristics, which makes them difficult to use in dynamic environments. Recent advances in machine learning and artificial intelligence may make acoustic prediction a viable path to auralizing virtual environments. This research focuses on training a supervised learning model to predict audio parameters that mimic perceptually plausible sound wave reflections and diffractions in a virtual environment, using only a scan of the surrounding environment and no live acoustic simulation. As video games are creative ventures, methods of manipulating a black-box audio model for creative control are also explored. The effect of auralizing virtual spaces on player experience is also investigated, with a focus on how much error a predictive model can tolerate while maintaining player immersion and perceptually plausible audio.

Important audio characteristics that are currently not often modelled in games:

Sound Wave Reflection:

- Characterised by the change in direction of a sound wave caused by bouncing off an object.

- Early Reflections: Distinct repetitions of the original sound.

- Late Reverberation: Reflections off nearby objects form superpositions that distort the original sound.

Sound Wave Diffraction:

- The bending of sound waves around obstacles.

- Obstruction/Occlusion: The sound wave is weakened when it collides with blocking objects.

- Sound Redirection: Changes to the observed position of the original sound.
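To make the idea of an early reflection concrete, the following is a minimal sketch of a single first-order reflection in 2D, computed by mirroring the source across a wall (the image-source construction). It is an illustration only, not the method used in this research: the function name, the `reflectivity` parameter, the 1/r spreading model and the wall placed on the line x = `wall_x` are all assumptions made for the example.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, approximate value for air at room temperature


def first_order_reflection(source, listener, wall_x, reflectivity=0.8):
    """Return (extra_delay_s, relative_gain) for one bounce off the wall x = wall_x.

    Hypothetical helper: `reflectivity` is an assumed fraction of amplitude
    that survives the bounce, not a measured material property.
    """
    # Mirror the source across the wall to obtain the image source.
    image = (2.0 * wall_x - source[0], source[1])
    direct = math.dist(source, listener)
    reflected = math.dist(image, listener)
    # The reflection arrives later because its path is longer.
    extra_delay = (reflected - direct) / SPEED_OF_SOUND
    # Assumed 1/r spreading loss relative to the direct sound, scaled by the wall.
    relative_gain = reflectivity * direct / reflected
    return extra_delay, relative_gain


delay, gain = first_order_reflection(source=(1.0, 0.0), listener=(3.0, 0.0), wall_x=0.0)
print(f"reflection arrives {delay * 1000:.2f} ms after the direct sound, gain {gain:.2f}")
```

The returned pair corresponds to a "distinct repetition" of the original sound: a delayed, quieter copy that could be mixed back in with the direct signal.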

What is auralization?

Auralization is the process of rendering the acoustics of a space through simulation.

What are the current methods for auralization?

There are two main categories of methods.

- Geometric Acoustics:

  - Assumes that sound travels as particles (in reality it travels as waves) in order to reduce complexity.

  - Generally uses ray-tracing techniques to keep track of those sound particles and their interactions with the environment.

  - Less accurate than numerical acoustics but much faster to compute. Still struggles to run in real time.

- Numerical Acoustics:

  - Solves the wave equation numerically to determine how the sound would actually propagate in a real environment.

  - Much more accurate than geometric acoustics but is 1000 times too slow to be used in real time.
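The geometric approach can be sketched as follows: a single sound "particle" is traced through a 2D rectangular room, bouncing specularly off the walls and losing energy at each hit. This is a toy illustration under stated assumptions, not a production acoustic ray tracer: the function name `trace_ray`, the uniform `absorption` coefficient and the axis-aligned room with one corner at the origin are all hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, approximate value for air at room temperature


def trace_ray(origin, angle, room_w, room_h, absorption=0.3, max_bounces=3):
    """Trace one ray and return a list of (arrival_time_s, remaining_energy) per wall hit."""
    x, y = origin
    dx, dy = math.cos(angle), math.sin(angle)
    energy, travelled = 1.0, 0.0
    hits = []
    for _ in range(max_bounces):
        # Distance along the ray to the next vertical and horizontal wall.
        tx = ((room_w if dx > 0 else 0.0) - x) / dx if dx != 0 else math.inf
        ty = ((room_h if dy > 0 else 0.0) - y) / dy if dy != 0 else math.inf
        t = min(tx, ty)
        x, y = x + dx * t, y + dy * t
        travelled += t
        # Each wall hit absorbs an assumed fixed fraction of the energy.
        energy *= 1.0 - absorption
        hits.append((travelled / SPEED_OF_SOUND, energy))
        # Specular reflection: flip the direction component normal to the wall hit.
        if tx <= ty:
            dx = -dx
        if ty <= tx:
            dy = -dy
    return hits


for time_s, energy in trace_ray(origin=(1.0, 1.0), angle=0.0, room_w=4.0, room_h=2.0):
    print(f"hit at {time_s * 1000:.2f} ms, energy {energy:.3f}")
```

A realistic geometric simulation would cast a very large number of such rays per sound source and per frame, which gives a sense of why the approach still struggles to run in real time.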

A possible solution for auralizing real-time dynamic virtual environments, and the research questions it raises:

- Can we bypass the need to simulate the acoustics of a virtual environment and instead use an AI model to make predictions of certain parameters to mimic the acoustics?

- How much error can a predictive method have before a typical player no longer perceives the sound as plausible?

- How does real-time auralization improve the player experience compared to current methods?
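The first two questions can be illustrated with a minimal sketch of supervised prediction: labelled examples pair an environment scan with audio parameters, a model predicts parameters for an unseen scan, and the prediction is checked against a tolerance. Everything here is an assumption made for illustration. A k-nearest-neighbour regressor stands in for whatever model the research actually trains, the scans are taken to be lists of ray-hit distances, the two audio parameters are hypothetical, and the training values and tolerances are invented toy numbers rather than results.

```python
import math

# Hypothetical training set: (scan, audio_parameters) pairs.
# scan: ray-hit distances in metres; parameters: [reverb_decay_s, occlusion_0_to_1].
# In practice the labels would come from an offline acoustic simulation.
TRAINING_SET = [
    ([2.0, 2.0, 2.0, 2.0], [0.3, 0.0]),     # small room
    ([3.0, 3.0, 3.0, 3.0], [0.5, 0.0]),     # medium room
    ([10.0, 10.0, 10.0, 10.0], [1.5, 0.0]), # large hall
    ([10.0, 0.5, 10.0, 10.0], [1.4, 0.8]),  # large hall with a nearby obstacle
]


def predict(scan, training_set=TRAINING_SET, k=2):
    """Average the audio parameters of the k training scans closest to `scan`."""
    nearest = sorted(training_set, key=lambda ex: math.dist(ex[0], scan))[:k]
    n_params = len(nearest[0][1])
    return [sum(ex[1][i] for ex in nearest) / len(nearest) for i in range(n_params)]


def within_tolerance(predicted, reference, tolerances):
    """True if every parameter is within its assumed allowable absolute error."""
    return all(abs(p - r) <= t for p, r, t in zip(predicted, reference, tolerances))


prediction = predict([2.5, 2.5, 2.5, 2.5])
print("predicted parameters:", prediction)
print("acceptable:", within_tolerance(prediction, [0.42, 0.0], [0.05, 0.1]))
```

The `tolerances` argument is where an answer to the allowable-error question would plug in: once perceptual testing establishes how far each parameter can drift before players notice, those thresholds replace the placeholder values.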